The Central Processing Unit (CPU) is often called the heart of a computer because it executes the instructions behind every task the system performs. A critical component of modern CPUs is the CPU cache. This small but influential structure has a large effect on overall system performance, mainly because it reduces the time the CPU spends waiting for data and instructions. To understand why the CPU cache matters, let’s look at what it is and how it affects performance.

What is CPU Cache?

A CPU cache is a small, high-speed memory built into the CPU itself. Main memory (RAM) offers far more storage but much slower access, so the cache keeps copies of recently and frequently used data and instructions close to the cores. When the CPU finds what it needs in the cache (a cache hit), it avoids the slower trip to main memory, which produces substantial performance gains.

Types of CPU Cache

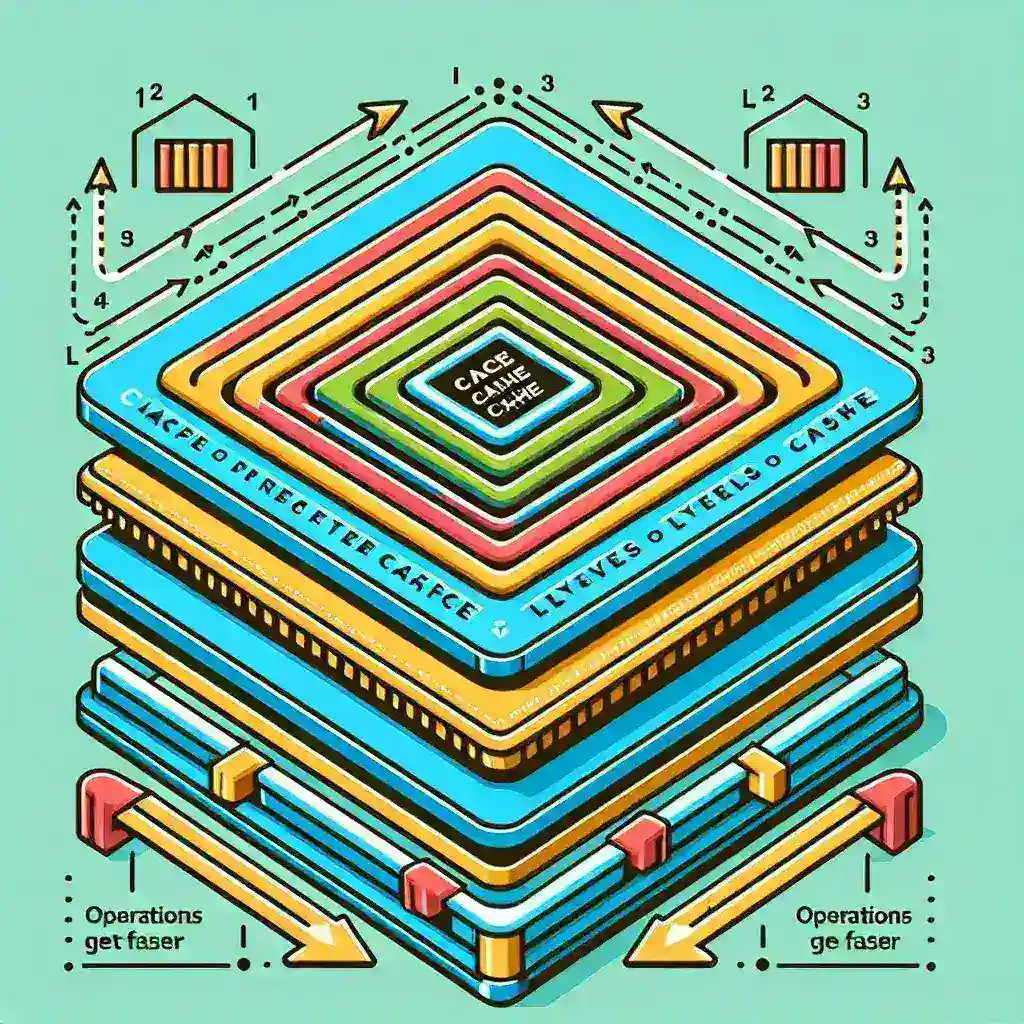

There are three primary levels of CPU cache, commonly referred to as L1, L2, and L3. These levels differ in size, speed, and proximity to the CPU cores.

| Cache Level | Typical Size | Speed | Location |
|---|---|---|---|
| L1 Cache | 16-64 KB | Fastest | Within each CPU core |
| L2 Cache | 256 KB-8 MB | Fast | On the CPU, in or next to each core |
| L3 Cache | 4-50 MB | Slowest of the three | On the CPU, shared among cores |

L1 Cache

The Level 1 (L1) cache is the smallest and fastest of the three. It is often split into two separate caches: one for data (L1d) and one for instructions (L1i). It resides directly within the CPU core, ensuring minimal latency.

L2 Cache

The Level 2 (L2) cache is larger than L1 but slower. However, it remains significantly faster than accessing the main memory. L2 cache plays a key role in feeding the L1 cache and is usually either integrated directly into the CPU core or located adjacent to it.

L3 Cache

The Level 3 (L3) cache is the largest and slowest of the three, but it is still much faster than main memory. L3 cache is often shared among multiple CPU cores, so data brought in by one core can be served to another core without another trip to main memory.

How CPU Cache Impacts Performance

The CPU cache improves the performance of a computing system through several key mechanisms:

- Reduced Latency: CPU cache reduces the time it takes for the CPU to access frequently used data and instructions. Lower latency translates to quicker response times and overall faster performance.

- Improved Throughput: Because the CPU stalls less often while waiting on memory, it can complete more instructions per cycle, increasing overall throughput.

- Enhanced Multitasking: Larger L2 and L3 caches can hold the working data of more programs and threads at once, so tasks running on different cores evict each other’s data less often and spend less time waiting on main memory.
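The latency benefit can be quantified with the standard average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied level by level from L1 outward. The Python sketch below is a minimal illustration; the function name `amat` and all latencies and hit rates are hypothetical round numbers, not measurements of any real CPU.

```python
def amat(levels, memory_latency):
    """Average memory access time (in cycles) for a multi-level cache.

    levels: list of (hit_latency_cycles, local_hit_rate), ordered L1 first.
    memory_latency: cycles to fetch from main memory after missing every level.
    """
    # Start at main memory and work inward: the miss penalty of each level
    # is the average access time of everything beyond it.
    time = memory_latency
    for hit_latency, hit_rate in reversed(levels):
        time = hit_latency + (1.0 - hit_rate) * time
    return time


# Hypothetical numbers for illustration only: (latency in cycles, hit rate).
hierarchy = [(4, 0.95), (12, 0.80), (40, 0.70)]
print(round(amat(hierarchy, memory_latency=200), 2))  # 5.6
print(amat([], memory_latency=200))                   # 200 (no cache at all)
```

With these assumed figures, the hierarchy cuts the average access from 200 cycles to about 5.6, even though one access in twenty misses L1.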

Cache Misses and Their Impact

Cache misses occur when the CPU is unable to find the required data in the cache. There are three main types of cache misses:

- Cold Misses: Also called compulsory misses, these occur the first time a piece of data is accessed. Because the data has never been loaded into the cache, it must be fetched from main memory.

- Capacity Misses: These occur when the program’s working set is larger than the cache. Data is evicted to make room for new data, and a later access to the evicted data misses even though it was cached before.

- Conflict Misses: These occur when multiple data items map to the same cache location (set) and repeatedly evict one another, even though other parts of the cache may still have room.

Every cache miss adds latency, because the CPU must wait while the data is fetched from the next, slower level: a lower cache level or, in the worst case, main memory.
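The three miss types can be told apart with a small simulation. The sketch below models a hypothetical direct-mapped cache (each memory block can live in exactly one line) and uses the common "3C" classification: a miss is cold if the block was never accessed before, capacity if a fully associative LRU cache of the same total size would also have missed, and conflict otherwise. The cache geometry (`num_lines`, `line_size`) and the function name are assumptions chosen for illustration.

```python
from collections import OrderedDict


def classify_misses(addresses, num_lines, line_size):
    """Label each access to a direct-mapped cache as hit, cold, capacity, or conflict."""
    direct_mapped = [None] * num_lines  # block number held in each cache line
    fully_assoc = OrderedDict()         # reference cache: same size, LRU order
    seen = set()                        # every block referenced so far
    results = []
    for addr in addresses:
        block = addr // line_size       # memory block (cache-line-sized chunk)
        index = block % num_lines       # the only line this block may occupy

        # Update the fully associative LRU reference cache.
        fa_hit = block in fully_assoc
        if fa_hit:
            fully_assoc.move_to_end(block)
        else:
            fully_assoc[block] = True
            if len(fully_assoc) > num_lines:
                fully_assoc.popitem(last=False)  # evict least recently used

        if direct_mapped[index] == block:
            results.append("hit")
        else:
            if block not in seen:
                results.append("cold")      # first touch ever
            elif not fa_hit:
                results.append("capacity")  # even a fully associative cache missed
            else:
                results.append("conflict")  # only the fixed mapping caused the miss
            direct_mapped[index] = block    # fill the line, evicting its old block
        seen.add(block)
    return results


# 4 lines of 64 bytes: addresses 0 and 256 both map to line 0.
print(classify_misses([0, 256, 0, 256], num_lines=4, line_size=64))
# ['cold', 'cold', 'conflict', 'conflict']

# Scanning 8 blocks twice overflows a 4-line cache.
scan = [block * 64 for block in range(8)] * 2
print(classify_misses(scan, num_lines=4, line_size=64))
# 8 x 'cold', then 8 x 'capacity'
```

The first run shows two blocks fighting over one line while three lines sit empty (conflict); the second shows a working set twice the cache size (capacity).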

Techniques to Optimize CPU Cache Performance

To minimize the negative impacts of cache misses and maximize CPU cache efficiency, several optimization techniques can be employed:

Cache Prefetching

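This technique predicts which data and instructions the CPU will need soon and loads them into the cache ahead of time. Prefetching can be performed by hardware, which watches for regular access patterns such as sequential scans, or by software, through prefetch instructions inserted by the compiler or programmer. Accurate prefetching reduces cache misses; inaccurate prefetching wastes memory bandwidth and can evict useful data.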
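The effect of prefetching can be shown with a toy model. The sketch below assumes a hypothetical fully associative LRU cache, counts only misses on demand accesses (the accesses the program itself issues), and optionally adds one of the simplest prefetching schemes, a next-line prefetcher that also loads line `n + 1` whenever line `n` is accessed. Function and parameter names are illustrative.

```python
from collections import OrderedDict


def count_demand_misses(line_addresses, capacity, prefetch_next_line):
    """Count misses on demand accesses in a fully associative LRU cache.

    line_addresses: sequence of cache-line numbers the program touches.
    prefetch_next_line: if True, each access also brings line + 1 into the cache.
    """
    cache = OrderedDict()  # cached line numbers, least recently used first

    def insert(line):
        if line in cache:
            cache.move_to_end(line)        # mark as most recently used
        else:
            cache[line] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict least recently used line

    misses = 0
    for line in line_addresses:
        if line not in cache:
            misses += 1                    # demand miss: program waits for memory
        insert(line)
        if prefetch_next_line:
            insert(line + 1)               # speculatively fetch the next line
    return misses


sequential = list(range(16))
print(count_demand_misses(sequential, capacity=8, prefetch_next_line=False))  # 16
print(count_demand_misses(sequential, capacity=8, prefetch_next_line=True))   # 1
stride2 = list(range(0, 32, 2))
print(count_demand_misses(stride2, capacity=8, prefetch_next_line=True))      # 16
```

The stride-2 case gets no benefit from this naive prefetcher, which illustrates why prediction accuracy matters; real hardware prefetchers typically also detect strided patterns.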

Cache Blocking

Cache blocking, also called loop blocking or tiling, restructures loops so that the program works on small blocks (tiles) of data that fit in the cache and reuses each block fully before moving on. This technique is common in scientific computing, where data sets are often far larger than the cache.
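Matrix multiplication is the classic example of cache blocking. The sketch below is a minimal Python illustration, assuming square matrices stored as lists of lists and a hypothetical default tile size `block=32`, which in practice would be tuned so the tiles being reused fit in cache. Python is used only to show the loop structure; the cache benefit itself appears in compiled code operating on contiguous arrays. A straightforward version is included to check that blocking changes only the order of the work, not the result.

```python
def matmul_naive(a, b):
    """Reference C = A x B with the textbook triple loop."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matmul_blocked(a, b, block=32):
    """C = A x B computed one tile at a time (cache blocking / tiling)."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    # Outer loops step over tiles; inner loops stay inside one tile of
    # A (rows ii.., cols kk..) and one tile of B (rows kk.., cols jj..),
    # so the same small working set is reused many times before moving on.
    for ii in range(0, n, block):
        for kk in range(0, n, block):
            for jj in range(0, n, block):
                for i in range(ii, min(ii + block, n)):
                    c_row = c[i]
                    for k in range(kk, min(kk + block, n)):
                        a_ik = a[i][k]
                        b_row = b[k]
                        for j in range(jj, min(jj + block, n)):
                            c_row[j] += a_ik * b_row[j]
    return c


n = 37  # deliberately not a multiple of the tile size
a = [[(3 * i + j) % 7 - 3 for j in range(n)] for i in range(n)]
b = [[(i - 2 * j) % 5 for j in range(n)] for i in range(n)]
print(matmul_blocked(a, b, block=8) == matmul_naive(a, b))  # True
```

Integer inputs keep the comparison exact; with floating-point data the two versions can differ in the last bits because they add the terms in a different order.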

Inclusive vs. Exclusive Cache Hierarchies

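Some CPU designs use an inclusive cache hierarchy, in which every line held in L1 is also held in L2 and L3. Others use an exclusive hierarchy, in which each line is stored in at most one level. The choice affects how effectively the caches are used: an exclusive hierarchy can hold more distinct data because the levels do not duplicate each other, while an inclusive hierarchy spends some capacity on duplicates but makes it easier to check whether a line is cached anywhere, since the outermost level holds everything.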
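A quick calculation shows one way the choice affects how effectively the caches are used. The sketch below computes an upper bound on how much distinct data a hierarchy can hold under each policy, from a single core’s point of view. The function name and the level sizes are hypothetical, and real designs are often neither strictly inclusive nor strictly exclusive.

```python
def unique_capacity_kb(level_sizes_kb, policy):
    """Upper bound on distinct data a cache hierarchy can hold, in KB.

    level_sizes_kb: sizes ordered from innermost (L1) to outermost level.
    policy: "inclusive" or "exclusive".
    """
    if policy == "inclusive":
        # Inner levels are copies of data already in the outermost level,
        # so the outermost level bounds the total.
        return level_sizes_kb[-1]
    if policy == "exclusive":
        # Each line lives in at most one level, so the capacities add up.
        return sum(level_sizes_kb)
    raise ValueError(f"unknown policy: {policy!r}")


sizes = [64, 1024, 8192]  # hypothetical L1, L2, L3 sizes in KB
print(unique_capacity_kb(sizes, "inclusive"))  # 8192
print(unique_capacity_kb(sizes, "exclusive"))  # 9280
```

With these sizes, exclusivity adds about 13% more unique capacity; the gain grows as the inner levels get larger relative to the last level.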

Conclusion

The CPU cache is an indispensable component of modern computing systems, bridging the gap between fast CPU cores and much slower main memory. Understanding the cache levels, how cache misses affect performance, and which optimization techniques reduce them makes it possible to achieve faster response times, better multitasking, and more efficient data handling. Whether you’re a casual computer user or an IT professional, understanding the role of the CPU cache helps you get the most out of your hardware.